Summary

Since its launch in November 2022, ChatGPT has become the emblem of AI in the eyes of the general public. The tool is extremely popular: by April 2023, it was estimated to have more than 170 million active users.

In January 2023, that figure was 100 million. The website of OpenAI, the company behind this technology, receives around 1.5 billion visits each month.

Needless to say, the conversational agent has created huge buzz, even among professionals. But as with all AI-based services, its use raises a number of questions, particularly in terms of ethics and information security:

- How can you integrate and use ChatGPT?

- What are its uses?

- What are the risks?

- Is there a specific legal framework?

Let's start by defining the tool provided by OpenAI.

What does "ChatGPT" mean and what is it?

ChatGPT is an artificial intelligence model capable of both understanding and generating natural language. Developed by the company OpenAI, this computer program takes the form of a conversational agent that users interact with in writing, by submitting a command or request called a "prompt". Its name combines two elements:

- "Chat" for the conversational interface which allows interaction with the tool

- "GPT" for "Generative Pre-trained Transformer". The transformer is, so to speak, the engine that allows the machine to generate content in response to the requests submitted. To do so, it has been trained on a gigantic corpus of data from the web, estimated at no fewer than 300 billion words.

Using ChatGPT in business

The chatbot's success can be attributed to its excellent text-generation performance. The tool is capable of "understanding" requests and generating structured content that is generally free of spelling and grammar errors. Below are some examples of how ChatGPT can be used in business:

- Creating advertising and marketing content.

- Automating the production of message templates for sales prospecting.

- Text analysis, including sentiment analysis, of CVs, transcriptions of conversations with clients, etc.

- Generating content for support teams (articles, guides, FAQs, etc.).

- Synthesizing information (summaries of meeting transcriptions, articles or other texts).

- Translation into multiple languages. Currently, ChatGPT supports around 50 languages.

- Generating or correcting code to accelerate development processes.

- Content moderation.

What's more, you can also integrate the ChatGPT API into your client-facing tools and products. Overall, OpenAI's solution can help overcome writer's block or take over tedious, time-consuming tasks such as translating text or correcting lines of code. However powerful ChatGPT may be, though, it is not all-knowing, it cannot do everything, and its use carries risks.
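To give an idea of what an API integration involves, here is a minimal sketch using only the Python standard library. It assumes OpenAI's public Chat Completions endpoint, where a request carries a `model` name and a list of `messages` (a "system" instruction plus the user's prompt) and the reply is read from `choices[0].message.content`. The model name `gpt-3.5-turbo`, the `OPENAI_API_KEY` environment variable and the helper names `build_chat_request` and `send_chat_request` are assumptions chosen for illustration; check the current API documentation before relying on them.

```python
import json
import os
import urllib.request

# Chat Completions endpoint of the OpenAI REST API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(user_text: str, system_prompt: str,
                       model: str = "gpt-3.5-turbo") -> dict:
    """Build the JSON body for one chat completion (hypothetical helper).

    The default model name is an assumption: use a model your account offers.
    """
    return {
        "model": model,
        "messages": [
            # The system message frames the assistant's role for your product.
            {"role": "system", "content": system_prompt},
            # The user message is the prompt coming from your tool or client.
            {"role": "user", "content": user_text},
        ],
    }


def send_chat_request(body: dict, api_key: str) -> str:
    """POST the body to the API and return the assistant's reply text."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    body = build_chat_request(
        "Draft a short follow-up email after a product demo.",
        "You are an assistant for a B2B sales team. Answer in English.",
    )
    key = os.environ.get("OPENAI_API_KEY")  # assumed location of the API key
    if key:
        print(send_chat_request(body, key))
    else:
        # Without a key, only show the request that would be sent.
        print(json.dumps(body, indent=2))
```

Separating the request construction from the network call keeps the prompt logic easy to test and review without spending API credits.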

What are the risks of using ChatGPT in your organization?

At the time of writing, ChatGPT has only been used on a large scale for a few months. Yet that has been long enough for cybercriminals to misuse it, in some cases dangerously.

In fact, chatbots can be misused to mass-produce false information, spam, or fake online reviews. But misinformation is not the only risk businesses need to protect themselves against.

Confidentiality and data security

What happens if an employee shares sensitive data with ChatGPT when interacting with the AI?

OpenAI offers two ways of using ChatGPT:

- Through their web or mobile app

- Through their API

The company is keen to reassure users, explaining that its models are not trained on data submitted through its web and iOS apps (and presumably its Android app) when chat history is disabled.

The same goes for data submitted through the API since March 1, 2023, unless you have explicitly opted in. In theory, if you do not want OpenAI to share your data or use it to train its models, you can simply disable chat history in the ChatGPT app settings and check in the API settings that you are not sharing your data.

Note that you can use the API to train ChatGPT yourself to complete more specific tasks for your organization based on data gathered by your organization.
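Training data for this kind of customization is supplied as a file. As a minimal sketch, assuming the chat-style JSONL format OpenAI documents for fine-tuning its chat models (one JSON object per line, each holding a `messages` list with system, user and assistant turns), the snippet below turns question-and-answer pairs taken from your own support content into training examples. The function name `to_finetune_jsonl`, the system prompt and the sample pairs are hypothetical; uploading the file and starting the fine-tuning job are separate API calls that are not shown here.

```python
import json


def to_finetune_jsonl(qa_pairs, system_prompt):
    """Convert (question, answer) pairs into chat-format JSONL text.

    Each output line is one training example: a system message, the
    user's question and the assistant reply the model should learn.
    """
    lines = []
    for question, answer in qa_pairs:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        # ensure_ascii=False keeps accented characters readable in the file.
        lines.append(json.dumps(example, ensure_ascii=False))
    # One JSON object per line, each terminated by a newline.
    return "".join(line + "\n" for line in lines)


if __name__ == "__main__":
    # Hypothetical support-desk examples gathered by the organization.
    pairs = [
        ("How do I reset my password?",
         "Go to Settings > Security and click 'Reset password'."),
        ("Can I export my call history?",
         "Yes: on the Calls page, choose Export and select CSV."),
    ]
    print(to_finetune_jsonl(pairs, "You are a support agent for ExampleCo."), end="")
```

Whatever the exact format, the quality of these examples determines the quality of the resulting model, so they should be reviewed before upload.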

Again, OpenAI emphasises that this data and the resulting models are used neither by the company nor by other organizations: only your organization can access, modify or delete them. Nevertheless, OpenAI retains ownership of these so-called "fine-tuned" models.

Finally, the company states that Zero Data Retention (ZDR) can be activated so that neither input nor generated data is stored. However, it explains that you may be asked to meet additional requirements.

What are these requirements? OpenAI does not specify them in its latest privacy policy for the API, dated July 21, 2023. Note that even when Zero Data Retention is activated and data is not stored, it can still be processed by OpenAI's security classifiers.

OpenAI now also offers a form for requesting that the company delete data about you, in line with the right to erasure (the "right to be forgotten") set out in Article 17 of the GDPR (General Data Protection Regulation).

What about copyright and intellectual property?

At the time of writing, it is impossible to know whether AI-generated responses are derived from copyrighted content.

This is because the AI does not cite its sources. Note also that ChatGPT, as a tool rather than a person, cannot claim copyright. OpenAI protects itself from possible abuses by explaining that content co-created with the AI can only be attributed to the person or business that co-created it and wishes to publish it.

It is therefore the user's responsibility to ensure that the responses provided do not infringe upon intellectual property rights.

Biases

Reducing bias is unquestionably one of the greatest challenges of generative AI. Even if significant progress has been made, ChatGPT and its equivalents clearly still have major shortcomings in this area.

Indeed, AI models do not always grasp the nuances of human opinion. Furthermore, they may reproduce opinions expressed in the content of their training corpus, compromising the neutrality of their responses.

Beware of approximate or false responses

Because they are well-structured and free from spelling errors, ChatGPT's responses seem entirely plausible.

Nevertheless, on closer inspection, ChatGPT and similar tools can get confused and give approximate answers, particularly on recent news or specialised subjects.

Incorrect responses can also be caused by prompts that are too short or ambiguous, or that lack sufficient context. In many cases, ChatGPT simply cannot respond correctly because it does not have the relevant data: it has not been trained on a corpus that covers the question.

What is the legal framework for ChatGPT?

Like any service likely to handle personal data, ChatGPT is subject to regulations such as the GDPR, the CCPA (California Consumer Privacy Act) and even HIPAA (Health Insurance Portability and Accountability Act), to name but a few. However, artificial intelligence technologies evolve more rapidly than laws. In April 2021, the European Commission laid the first foundations of Europe's AI regulatory framework by proposing rules based on different levels of risk (unacceptable, high, limited). When it comes to generative AI, however, the framework remains rather light for now. The European Parliament stipulates that generative AI must comply with transparency requirements:

- "Disclosing that the content was generated by AI

- Designing the model to prevent it from generating illegal content

- Publishing summaries of copyrighted data used for training"

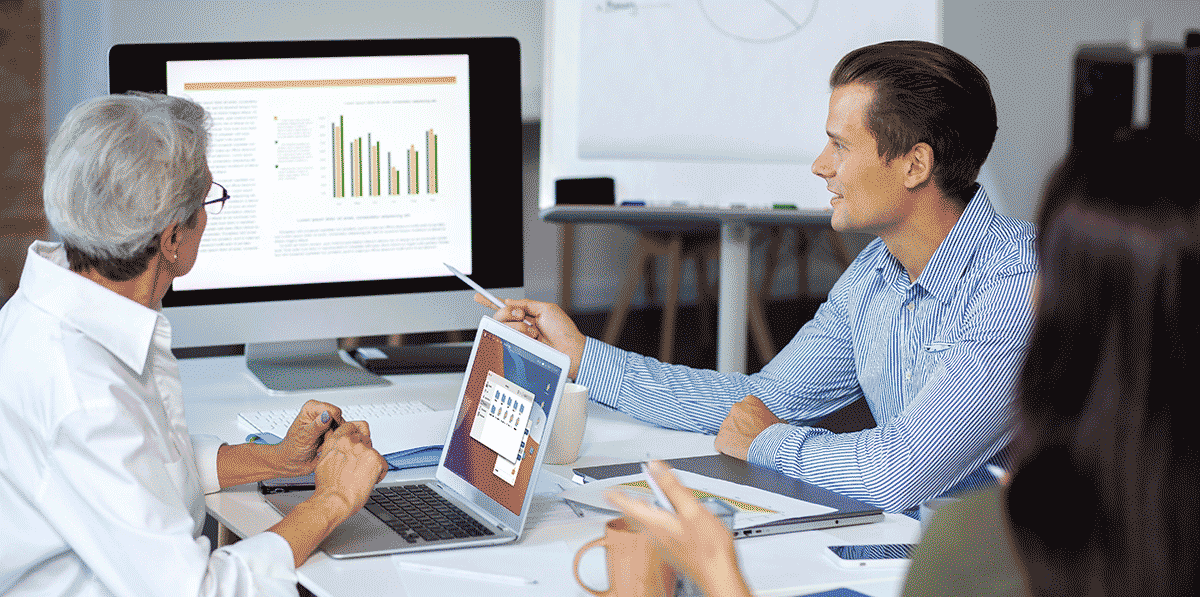

How can you integrate ChatGPT into your organization?

As we saw earlier, ChatGPT is available to professionals in two forms: the app and the API.

Measures to be put in place

Whether you choose the app or the API, you will need a meticulous approach to rolling it out within your teams and overseeing its use. Here are some tips for integrating ChatGPT successfully:

- Set clear boundaries: It is crucial to define the scope of ChatGPT and the instances in which you will be using the tool. Which departments of your organization are affected? What will you use ChatGPT for? For translation, analysis, article creation?

- To make the most of OpenAI's solution and protect your data, we recommend using the API. With the API, you can train ChatGPT yourself in an isolated environment, providing it with the corpus of your choice (documents, client interactions, support articles, contracts, etc.).

- Following this training phase, you will need to set up your safeguards by establishing explicit guidelines on the questions and themes that ChatGPT can and cannot deal with, to avoid potential problems in terms of ethics and privacy.

- Finally, involve all your employees to gather as much feedback as possible and continually refine the model.
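One way to turn such guidelines into practice is a filter that screens prompts before they reach ChatGPT. The sketch below is a minimal illustration under stated assumptions, not a complete safeguard: the blocked themes and keywords in `BLOCKED_THEMES` are a hypothetical policy, keyword matching is deliberately crude, and the two regular expressions only catch common email and phone-number formats, not all personal data.

```python
import re
from dataclasses import dataclass

# Hypothetical policy: themes employees must not submit to ChatGPT.
BLOCKED_THEMES = {
    "medical": ["diagnosis", "prescription"],
    "legal": ["lawsuit", "litigation"],
}

# Common email shape: local part, "@", then a dotted domain.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Rough phone pattern: optional "+", then 9 or more digits that may be
# separated by single spaces, dots or dashes.
PHONE_RE = re.compile(r"\+?\d(?:[\s.-]?\d){8,}")


@dataclass
class ScreenResult:
    allowed: bool
    text: str
    reason: str = ""


def screen_prompt(prompt: str) -> ScreenResult:
    """Block prompts on forbidden themes; redact contact details otherwise."""
    lowered = prompt.lower()
    for theme, keywords in BLOCKED_THEMES.items():
        if any(word in lowered for word in keywords):
            return ScreenResult(False, "", f"blocked theme: {theme}")
    redacted = EMAIL_RE.sub("[EMAIL]", prompt)
    redacted = PHONE_RE.sub("[PHONE]", redacted)
    return ScreenResult(True, redacted)


if __name__ == "__main__":
    for text in [
        "Contact jane.doe@example.com or +33 6 12 34 56 78 about the demo.",
        "Summarise this lawsuit for me",
    ]:
        print(screen_prompt(text))
```

Blocked prompts and their reasons can also be logged, which gives the feedback loop described in the last tip concrete data to refine the guidelines.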

And why not use ChatGPT indirectly?

OpenAI's solution has been integrated into a large number of apps and extensions for individuals and businesses. It is not unusual to find some of these functions in third-party solutions.

This is the case for Empower, Ringover's conversational analysis solution, which uses the API to summarise call transcriptions. This means you can also access key information from each interaction with your clients, prospects or partners without having to re-listen to the entire call.

FAQs: ChatGPT for Business

Is ChatGPT reliable?

Responses from the ChatGPT app are not always reliable: despite its immense knowledge base, the tool is not all-knowing, and the most recent material in its training corpus dates from 2021.

The tool can respond inaccurately or inappropriately in some cases. The quality of its responses depends above all on the corpus the model is trained on. This means that if you train ChatGPT on your own data through the API, you must make sure to feed it reliable information that reflects your business's values.

Is there a risk with ChatGPT?

Yes, ChatGPT carries real risks, as do most generative AI apps. Despite the safeguards put in place and numerous updates, it can be misused to generate misleading or inaccurate information. It can also produce biased responses, which could have serious consequences, including on a large scale, in areas such as health, politics and finance. In some cases, artificial intelligence is also unable to differentiate between private and public information, which poses a privacy risk. That is why it is essential to carefully control the integration of ChatGPT and ensure that the model is trained on relevant, reliable data that respects the company's values and policies.

Published on November 13, 2023.